Numerical Methods -- Solutions to Linear system of Equations -- Module 4

Index

- Gaussian Elimination method to find the solution for a system of linear equations

- Gauss-Jordan Elimination Method

- The Matrix Inversion method.

- Pre-requisites before using this method (must) (skip ahead if you already know these).

- 1. The Determinant of a 2x2 matrix.

- 2. Determinant of a 3x3 matrix

- 3. Transpose of a matrix.

- 4. Inverse of a 2x2 matrix.

- 5. Inverse of a 3x3 matrix

- Method 1 Using Gauss-Jordan Elimination

- Method 2 Using the method of matrix of minors, co-factors and adjugate matrix.

- Finding the solution to a system of equations using the matrix inversion method.

- LU Factorization Method

- 1. Finding

- 2. Finding

- 3. Solving a system of equations using forward and backward substitution of

- Gauss-Seidel Method

Gaussian Elimination method to find the solution for a system of linear equations

This method converts a system of equations into an upper-triangular form (where all entries below the main diagonal are zero) so that you can then solve for the unknowns via back substitution.

https://www.youtube.com/watch?v=eDb6iugi6Uk

Example 1

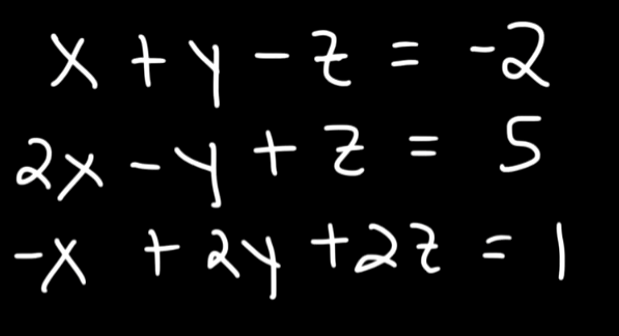

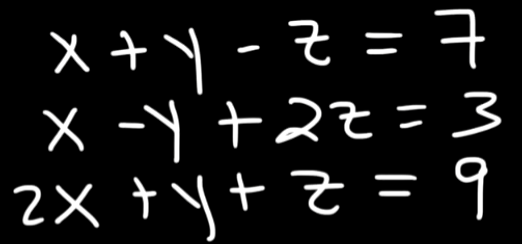

So let's say we have this system of linear equations here

So first of what we will do is construct an augmented matrix using the coefficients of the equations.

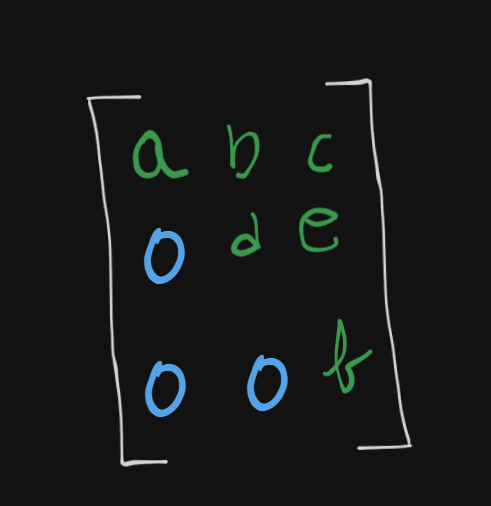

Now what we need to do is to convert this to a row-echelon form.

The row-echelon form of this matrix should be something like this :

Note that the bottom left half elements below the diagonal line are all zeroes. (Only works for perfect square matrices)

(Image generated by the new version of ChatGPT, not bad indeed.)

So with this matrix, let's call it

How do we convert it to a row-echelon form?

It's done by doing something called a matrix transformation via matrix row operations.

So first of all we select a pivot element. A pivot element is the first non-zero element we encounter in a matrix.

In

Now all our operations will be centered around

So we can do $$R_3 \rightarrow R_1 \ + \ R_3 $$

So the matrix becomes :

Remember that when we add two rows, all elements of the rows are affected.

We can then try :

Then we can do :

We are already there

This can be done by simply for

We have already reached the row-echelon form, but,

We can further simplify the matrix by performing an operation on

Now we can convert the matrix back to equations as :

Now with some simple substitution :

Thus :

Therefore :

So we have the solution for the system of equations as:

Summary:

Always use the format:

where :

For example if we have the rows :

-

Pivot Row (Row 1):

Here, if you want to eliminate the

-variable, the pivot coefficient is 2. -

Target Row (Row 2):

The target coefficient for the

-variable in Row 2 is .****

Example 2

So we can write the matrix as:

Here we will select the pivot element as 2.

So all our operations will be centered around

So to solve remove the coefficients of

So the target row is

So

So $$R_3 \ \to \ R_3 \ - \ m \ \times R_1 \ \implies \ R_3 \ \to \ R_3 \ - \ \frac{2}{2} \ \times R_1 \ \implies R_3 \ - \ R_1$$

So

Therefore the matrix becomes :

Now for Row 2, we have :

Thus Row 2 will be :

Therefore the matrix now becomes :

Now let's remove the

So our pivot component for

But why change pivots now?

The reason is that each pivot is used to clear the entries below it in its column.

The way pivots are chosen are that they are taken from a column which hasn't been used for a pivot, yet.

So :

Therefore

So

Thus,

So the matrix will become :

Now let's convert this matrix back to a system of equations :

So we get :

And finally :

Thus :

Gauss-Jordan Elimination Method

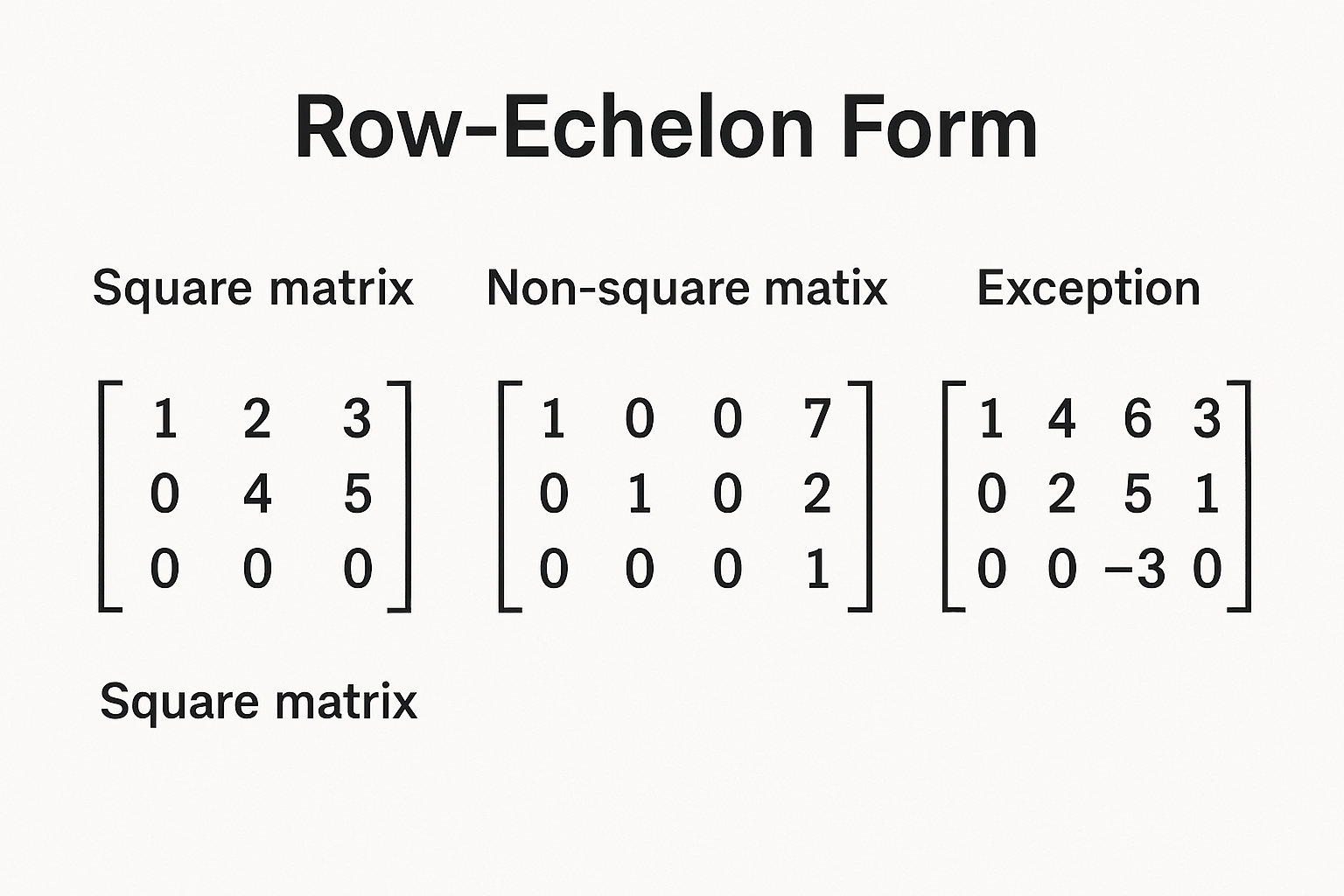

This is similar to the previous method, except here we reduce the matrix even further, to something called a "reduced row echelon form".

https://www.youtube.com/watch?v=eYSASx8_nyg

Now this, is the reduced row echelon form.

where

The identity matrix is :

where the diagonal elements of the matrix are 1 and all the other elements are zero.

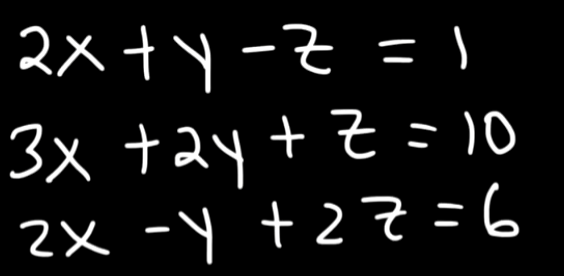

So let's say we have this system of equations here:

We will solve them in the same manner as we did before, only that there will be a few more operations now.

So let's convert this system of equations to an augmented matrix first.

Let's set the pivot element to 1 for the

So the target row is

Thus,

So,

So the matrix becomes :

Now the target is

So:

So the matrix becomes:

Now we set the pivot element to -2 for the

Thus,

So :

So, the matrix now becomes :

Now for the

So:

Note: If we instead tried to set

So,

So, the matrix becomes :

Now for

So :

Thus the matrix becomes :

Now, for the

Forward Elimination vs. Backward Elimination

-

Forward Elimination:

-

Key Point: Once a pivot is chosen in a column, it is used to clear entries downward. You do not worry about entries above because those rows haven't been processed yet.

We did this when we were processing the elements below the diagonal line.

-

-

Backward Elimination (or Back Substitution for RREF):

Once the matrix is in row-echelon form (all entries below each pivot are zero), you perform backward elimination to clear entries above each pivot. This step ensures that each pivot is the only nonzero entry in its column.We are now about to do this with the

component of . -

Why This is Valid:

-

The forward phase ensures that each pivot has zeros below it.

-

The backward phase then uses each pivot to clear above it.

-

Even though the pivot in Row 2 was already used to clear entries below (in Row 3), it must also clear any nonzero entry above it (in Row 1) to satisfy the RREF condition (each pivot column has zeros everywhere except for the pivot itself).

-

-

So we can safely select -2 as the pivot.

So :

Before that we scale

Now

So, proceeding with

So, the final matrix becomes :

Now we can easily convert them back to equations and get the values of

So,

So the solutions are :

The Matrix Inversion method.

Let's walk through the matrix inversion method step by step. The idea is that if you have a system of linear equations in the form

and the coefficient matrix

Pre-requisites before using this method (must) (skip ahead if you already know these).

- Knowing how to find out the determinants of 2x2 and 3x3 matrix. (https://www.youtube.com/watch?v=3ROzG6n4yMc)

- Transpose of a matrix.

- Knowing how to find the inverses of 2x3 and 3x3 matrix. (https://www.youtube.com/watch?v=aiBgjz5xbyg) (2x2) and (https://www.youtube.com/watch?v=Fg7_mv3izR0) (3x3)

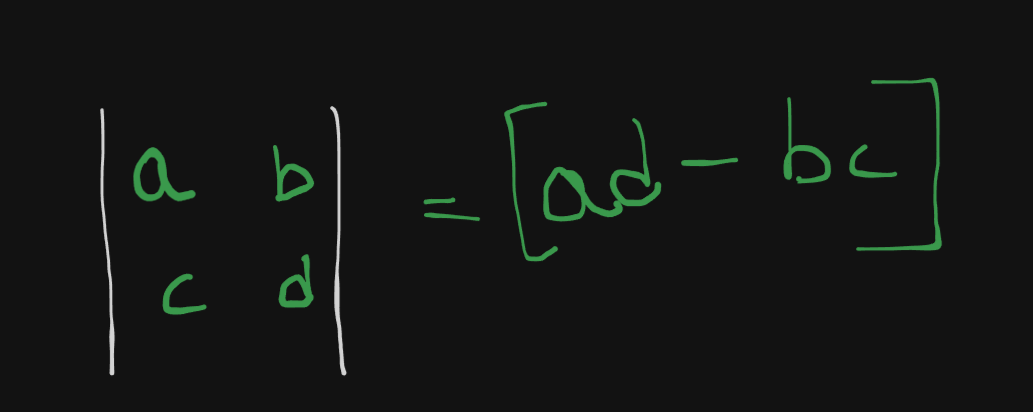

1. The Determinant of a 2x2 matrix.

The determinant of a 2x2 matrix is given by :

Let's say we have this example :

So the determinant of this matrix will be:

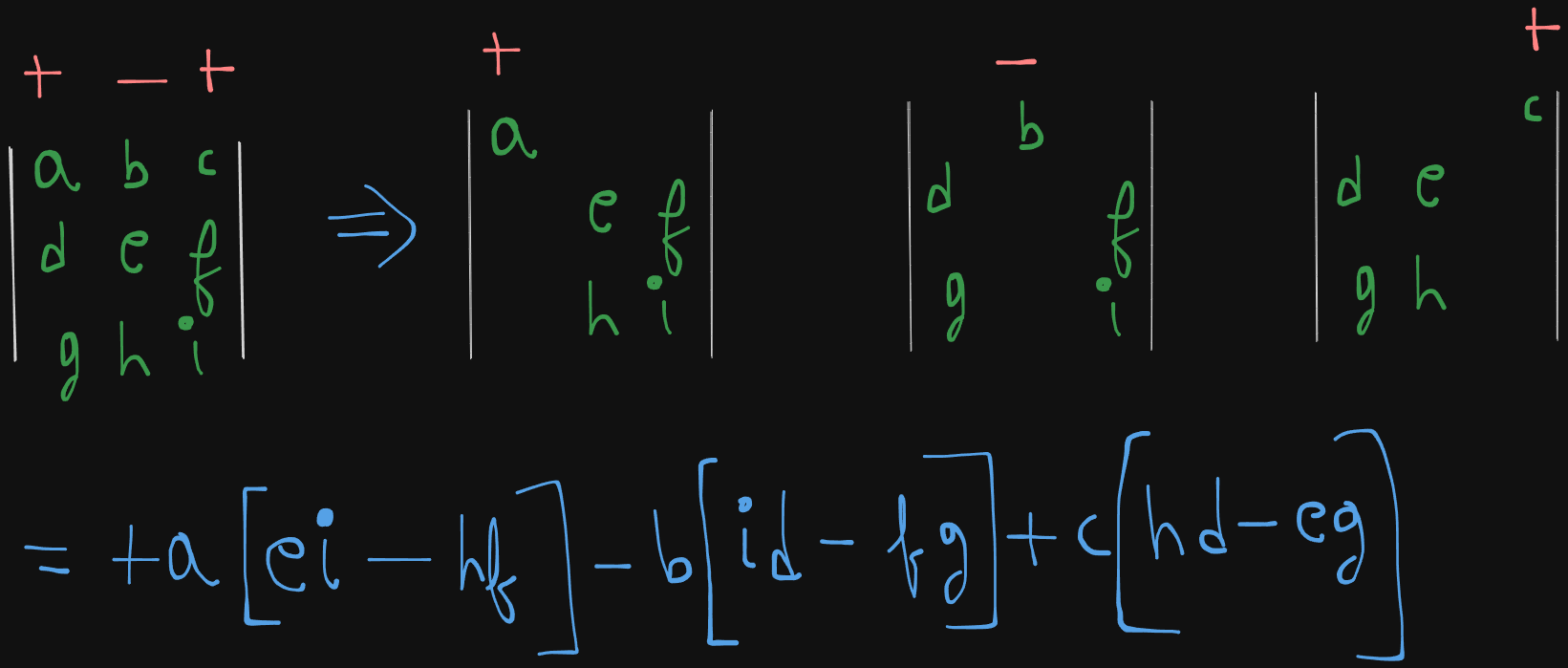

2. Determinant of a 3x3 matrix

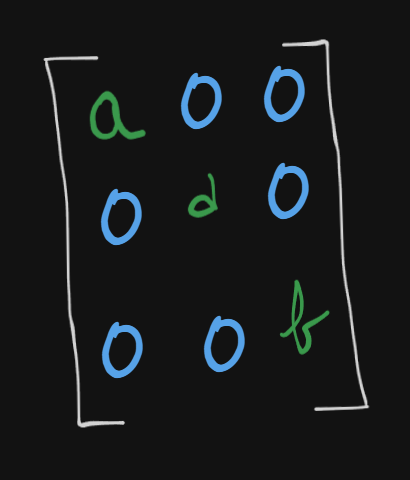

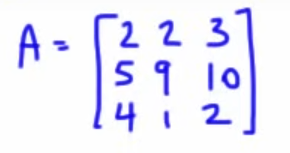

Let's say we have a 3x3 matrix here

The determinant is given by :

which evaluates to :

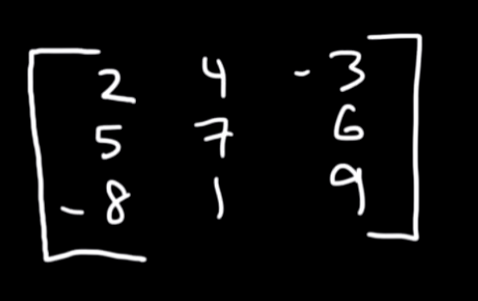

So let's say we have this example here :

The determinant of this 3x3 matrix will be:

3. Transpose of a matrix.

The transpose of a matrix is just it's rows and columns interchanged.

The transpose of this matrix will be :

Same for a 2x2 matrix :

The transpose will be:

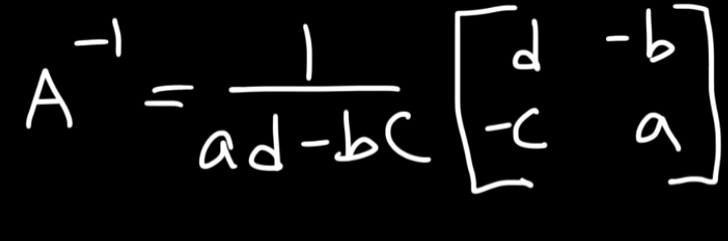

4. Inverse of a 2x2 matrix.

Note: Before trying to invert a matrix, you must first verify if the matrix is invertible or not.

This is done by finding the determinant of the matrix and checking whether it's zero or not.

If the determinant is zero, the matrix is not invertible, else the matrix is invertible.

So if we have a 2x2 matrix

The inverse is given by :

where $$\frac{1}{ad \ - \ bc}$$

is the determinant of the 2x2 matrix.

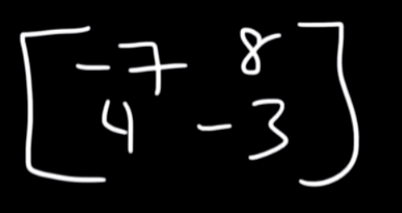

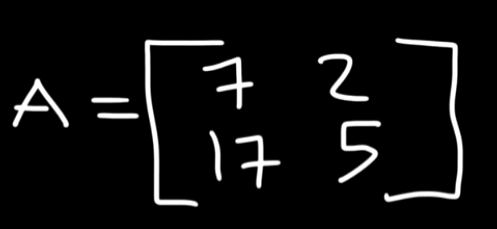

So let's say we have this matrix example.

So starting off, let's find the determinant of this matrix.

Now to find the inverse of this matrix :

or

5. Inverse of a 3x3 matrix

Now there are two ways to find the inverse of a 3x3 matrix.

Method 1 : Using Gauss-Jordan Elimination

One way is to use matrix row operations and using the method of Gauss-Jordan elimination to find the inverse of the 3x3 matrix.

https://www.youtube.com/watch?v=Fg7_mv3izR0

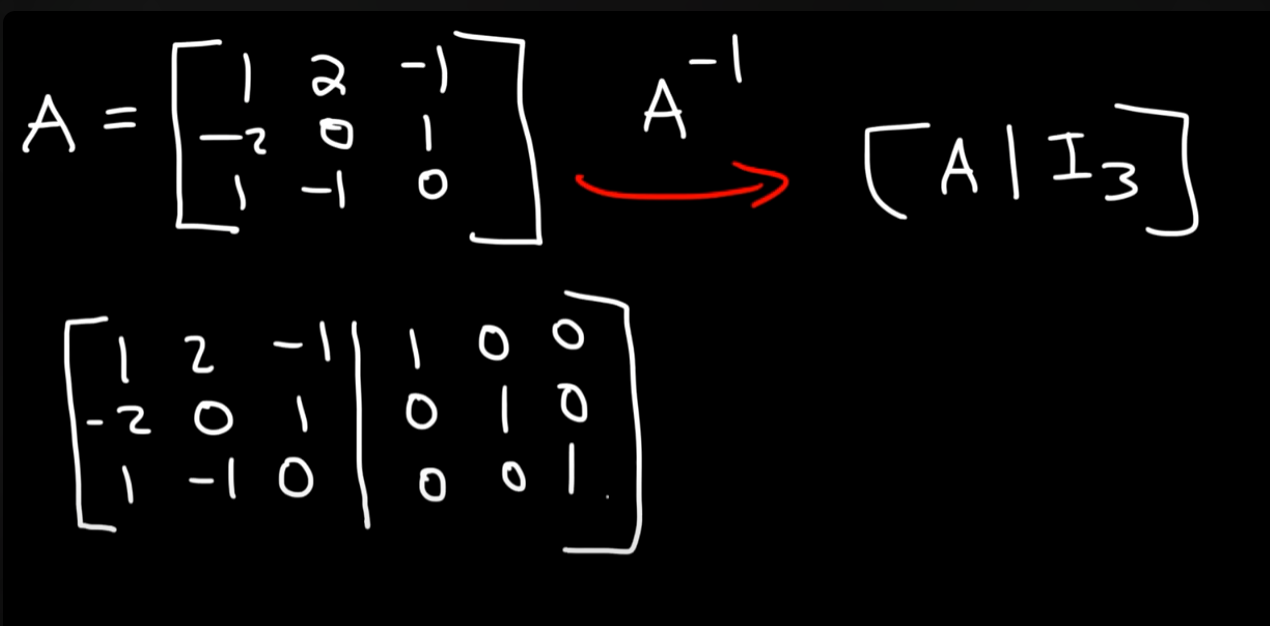

So if you have this matrix for example :

We write it out as in the picture, by augmenting the matrix with the identity matrix.

Then the goal is to perform row operations such that the identity matrix (reduced row echelon form) is on the LHS and on the RHS we get the inverted matrix.

The procedure is the same as Gauss-Jordan elimination, so if you want to see how that's done you can either watch the video in the link or just scroll up to the section of Gauss-Jordan elimination.

Method 2: Using the method of matrix of minors, co-factors and adjugate matrix.

This method is a much less time consuming one that the Gauss-Jordan elimination method.

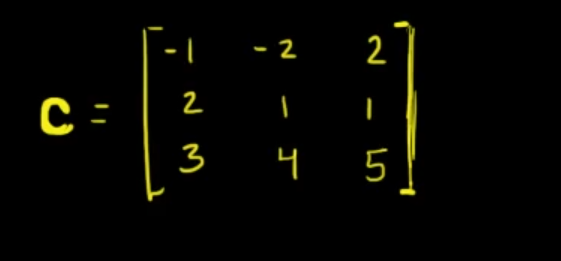

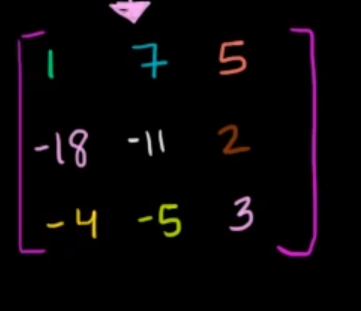

So we start off with a 3x3 matrix

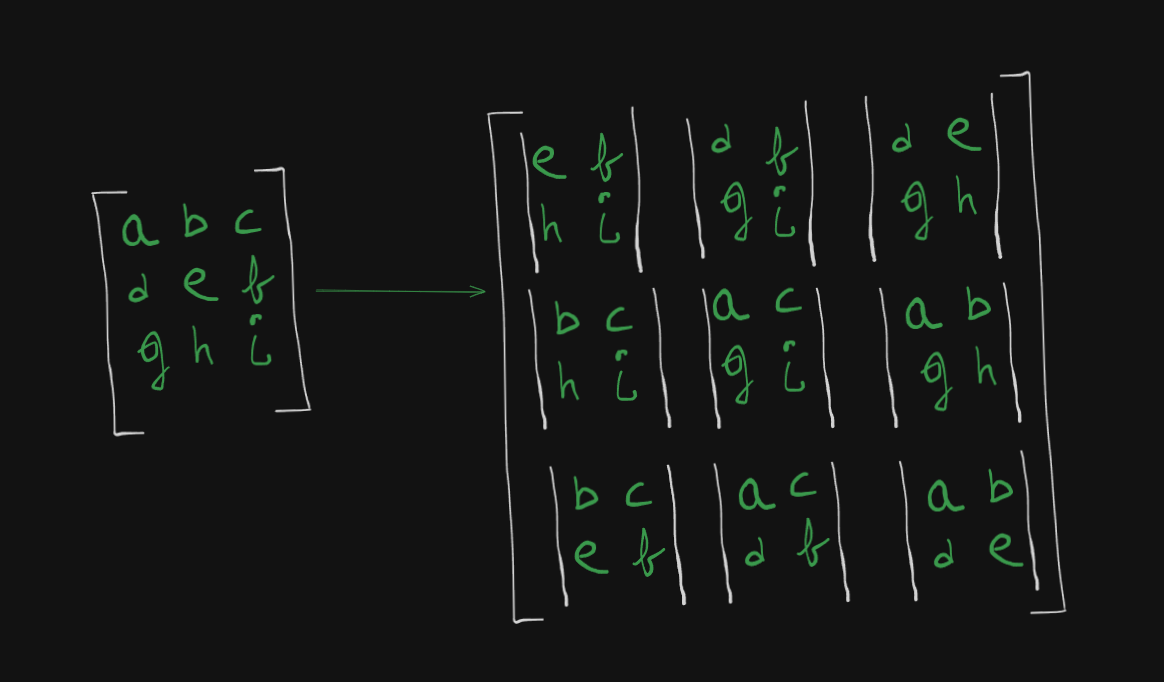

We will create something called a "matrix of minors".

The matrix of minors is of this format:

which is basically :

- Choose an element.

- Hide the row and column in which that element is.

- Write the remaining elements in their existing order within a determinant format as singular element of a larger matrix.

- Keep repeating till all the elements in the original matrix, have been chosen.

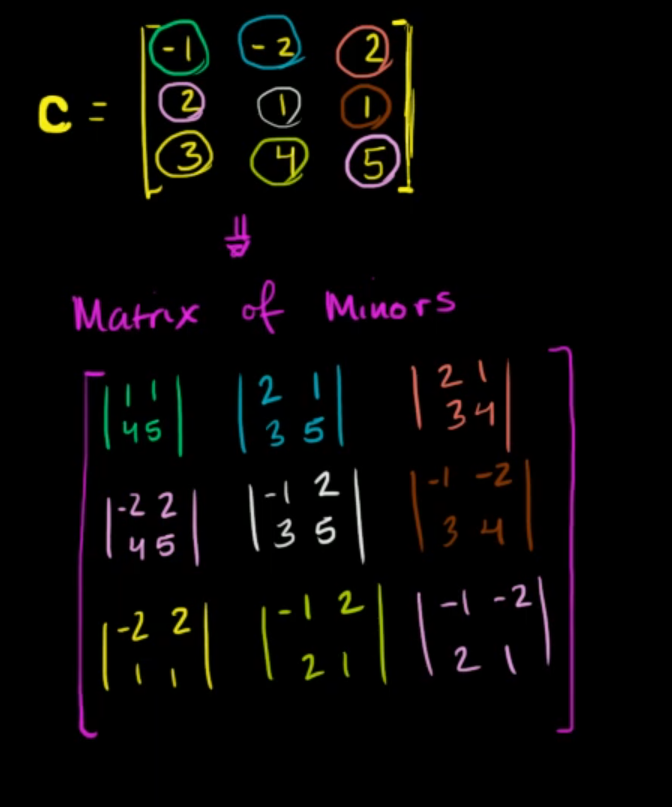

So with our example matrix it will be :

Now after solving the individual determinants :

This is the matrix of minors.

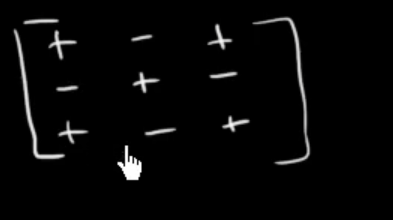

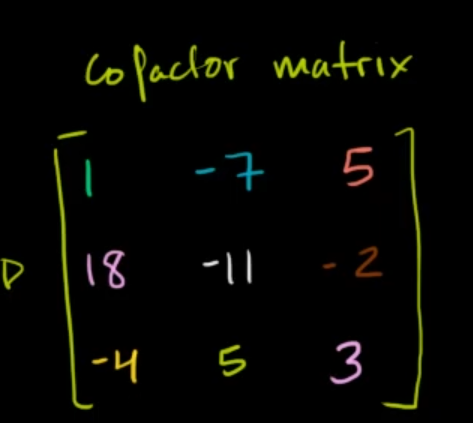

Next up is the co-factor matrix.

Simply apply this sign pattern (we see this pattern during the determinant of a 3x3 matrix) :

to the elements of the matrix of minors.

Which now becomes the Co-Factor matrix.

Now we are halfway there!

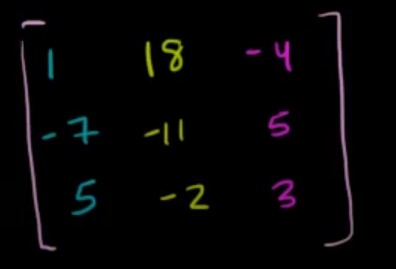

Next up is the adjugate matrix.

The adjugate matrix is just the transpose of the Co-Factor matrix (rows and columns interchanged).

So the final inverse of our 3x3 matrix is the product of the determinant times the adjugate matrix

So we already know how to find the determinant of a 3x3 matrix, so I will be skipping that here

The determinant of this matrix is :

So the final inverse is :

which will result in :

Finding the solution to a system of equations using the matrix inversion method.

So now that we have all the pre-requisites out of the way we can proceed with the matrix inversion method of finding the solution to a system of equations.

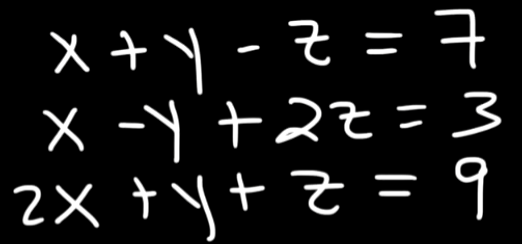

Let's proceed with the example we used in the Gauss-Jordan elimination method, better to proceed with a known problem as an example.

So we have the system of equations

So let's convert this system of equations to an augmented matrix first.

However here we will do two things.

We split the augmented matrix into two separate matrices.

One will be the coefficient matrix, let's call it

And the variables matrix, let's call it

And the other one will be the constant matrix, let's call it

Now we need to find :

We will try to invert the coefficient matrix

So let's find out the determinant first:

Since

So I will proceed with the second method here, as it's much faster than doing row operations.

Starting with the matrix of minors :

will be:

(That was quite painful to type and render, but atleast faster than drawing with a mouse).

Now we solve the individual determinants :

So this is the matrix of minors.

Now we apply the sign scheme to get the co-factor matrix:

Now we find the adjugate matrix by transposing the co-factor matrix :

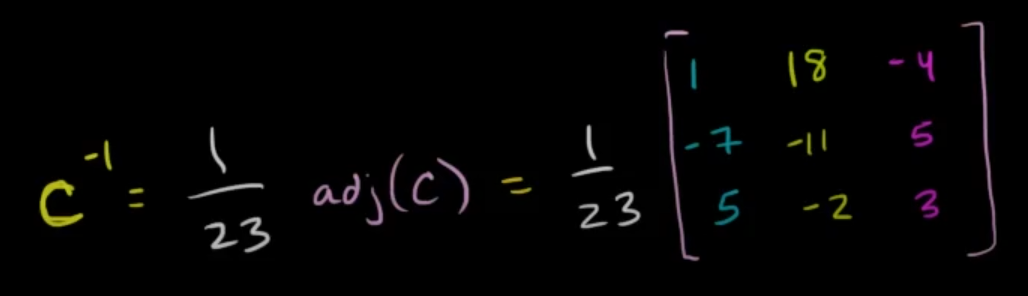

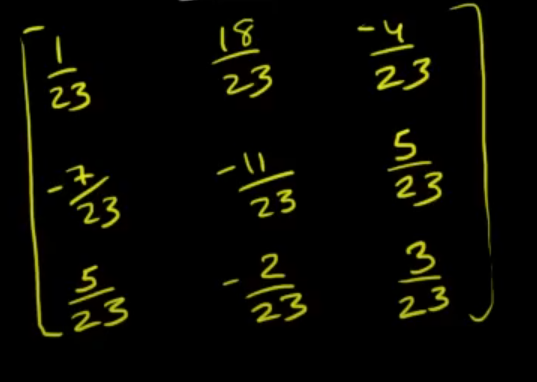

Now we compute the inverse of the matrix :

which will be :

Now using the formula :

we can find out the value of

So :

For

Similarly :

And lastly :

So we get a unique solution this time as :

LU Factorization Method

https://www.youtube.com/watch?v=dwu2A3-lTGU&list=PLU6SqdYcYsfLrTna7UuaVfGZYkNo0cpVC&index=20

https://www.youtube.com/watch?v=a9S6MMcqxr4 (A better explanation of how to find the

https://www.youtube.com/watch?v=Tzrg0GgEfZY (continuation, shows how to find the solution of a system of equations using the

The LU factorization method is an iterative method, that is the solving is carried out in iterations.

LU factorization is especially useful when you need to solve multiple systems with the same coefficient matrix

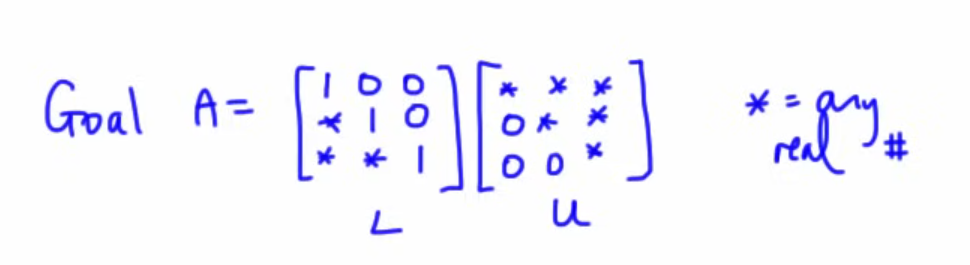

The idea behind LU factorization is to write the coefficient matrix

Once we have this factorization, we solve the system:

by first solving

which is forward substitution

and then solving :

which is backward substitution

So let's work first on understanding how to find :

with an example here.

So with this example matrix

(the

or

Note that the matrix

1. Finding

So we start by finding

That can be done by simply using Gauss elimination method.

We set the pivot to

So for

So $$R_2 \ \to R_2 \ - \ \frac{5}{2} \ R_1$$

So the matrix becomes :

Now for

So $$R_3 \ = \ R_3 \ - \ 2R_1$$

So the matrix becomes:

Now for the

So :

Then :

So the matrix

2. Finding

Now the matrix

The upper triangular half of

Now the lower triangular half, which represents the

So we currently have

Now we will just fill in the gaps using the

So, for the

Next, for the

And lastly, for the

And this the

3. Solving a system of equations using forward and backward substitution of

So, now we know how to find :

Now let's proceed to solving a system of equations with forward and backward substitution.

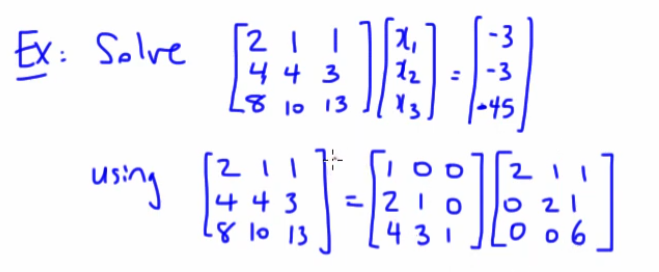

Let's use a new example for this part:

This time we have already been given

Now by default we have the system of equations as :

So :

And since

Now to simplify things, let

So now we will be solving :

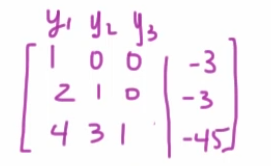

which will look like this :

So we will get :

So we have :

So

Now we go back to this part that :

So we will use this equation to find

Therefore :

So :

And lastly :

So :

Now since :

We have our solution to the system of equations as:

Example 2

Let's try out with a known example from our previous sections to see if we can get the same solution or not with the LU factorization method.

So we have these three equations again.

Let's represent them in the matrix format.

One will be the coefficient matrix, let's call it

And the variables matrix, let's call it

And the other one will be the constant matrix, let's call it

Step 1. Finding

We will perform Gauss-elimination on the coefficient matrix to row-echelon form, which will be

Since this a known matrix which we have used in our previous examples, I will just skip the row-operations part.

or

Now this is

Step 2. Finding

The

The

1

2

So the

Step 3. Performing forward and backward substitution to solve the system of equations.

Now we set :

where

So we will first solve :

So :

Thus :

Next we perform back substitution to find :

So :

So :

Thus, we have :

Therefore we have the solution to the system of equations as :

which is the same as the one we found before.

So our answer and this approach to the LU method is correct.

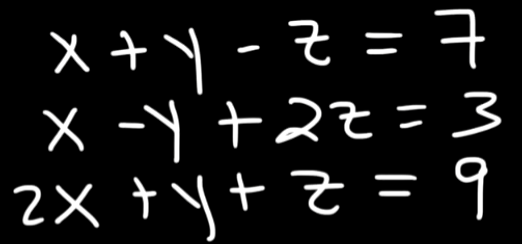

Gauss-Seidel Method

The Gauss–Seidel method is an iterative procedure that updates each variable in sequence using the most recent values from the previous updates. The general idea is to rewrite each equation in the form :

Now that we have values in terms of all three variables. We can start iterations on this and try to approximate the values of

Now for the approximation to be as accurate as possible, the system of equations must converge.

For a convergence to take place the system of equations must be diagonally dominant.

We can check whether a system is diagonally dominant or not by creating the coefficient matrix :

Now, we can check for diagonal dominance as follows :

- Row 1: $$|1| \geq \ |1| \ + \ |-1| \ = \ 1 \ + \ 1 \ = 2$$

Here we see that the selected diagonal element is not greater than the sum of the remaining elements in it's row, so it's not diagonally dominant.

Similarly :

- Row 2: $$|-1| \ \geq \ |1| \ + \ |2| \ = \ 3 $$

Again the selected diagonal element is less than the sum of the remaining elements in it's row, so it's not diagonally dominant.

Lastly :

- Row 3: $$\ |1| \ \geq \ |2| \ + \ |1| \ = \ 3$$

Once again the selected diagonal element is less than the sum of the remaining elements in it's row, so it's not diagonally dominant.

Since the matrix is not strictly diagonally dominant, the Gauss–Seidel method may not converge for this system.

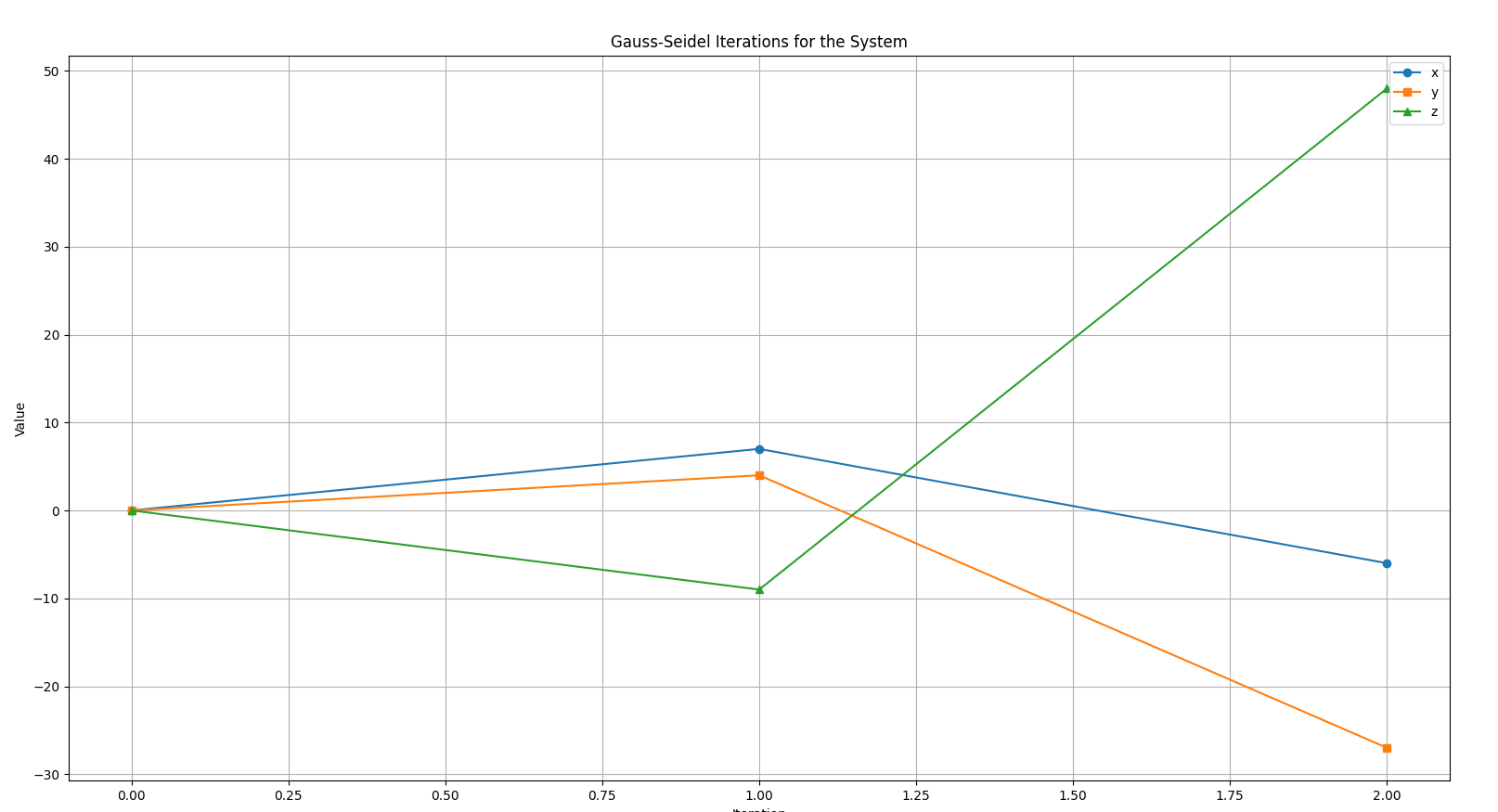

Anyways, we will start with the iterations to see where it leads us :

Starting initially :

and we assume all three to be zero.

So :

Now in the first iteration, we get the values of

See that we immediately used the updated value of

Now in the second iteration :

So let's do a check to see if the system is converging or diverging.

We can already observe that the system is starting to spread out (diverge) at iteration 2 itself, due to a lack of diagonal dominance.

So we can see that in iteration one the system was at :

In the second iteration we have the system at :

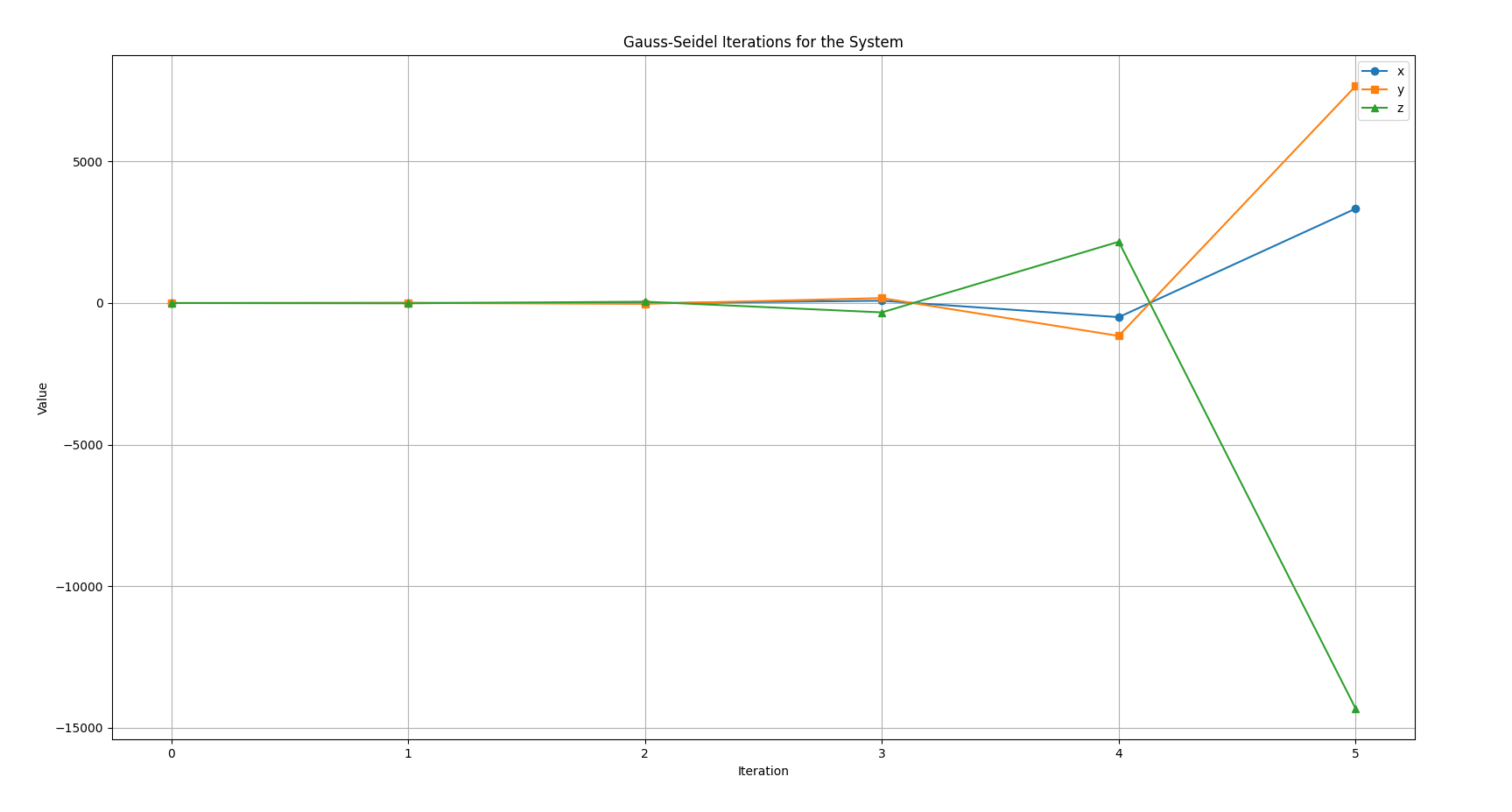

If we continue for 3 more iterations, let's see where this leads us.

Iteration 3:

Iteration 4 :

Iteration 5:

So we can see the values have skyrocketed too much due to lack of diagonal dominance and subsequent divergence :

So in this case, the Gauss-Seidel method is not applicable for a system of equations that is not diagonally dominant as it diverges too much from it's solution :

https://www.youtube.com/watch?v=gxy6VI1hEfs&list=PLU6SqdYcYsfLrTna7UuaVfGZYkNo0cpVC&index=26

This video has another example that is diagonally dominant, and in that case the Gauss-Seidel method works and we get an accurate approximation after 4-5 iterations, so you can try that example out.